Prerequisites

- Docker

- Basics of Kubernetes (Pods, Services, Deployments)

- YAML

- Linux command line and setting up a Linux machine

Certified Kubernetes Administrator

- The Kubernetes certification exam is hands-on: you solve tasks on live clusters from the command line

- Exam Details

- Exam cost: $300 (includes one free retake within the next 12 months)

- Online exam

- Exam duration: 3 hours (~24 questions)

- The version of Kubernetes running in the exam environment: v1.16

- Passing score: 74%

- Resources allowed during the exam:

https://github.com/kubernetes/

Useful Resources

Certified Kubernetes Administrator: https://www.cncf.io/certification/cka/

Exam Curriculum (Topics): https://github.com/cncf/curriculum

Candidate Handbook: https://www.cncf.io/certification/candidate-handbook

Exam Tips: http://training.linuxfoundation.org/go//Important-Tips-CKA-CKAD

FAQ: https://training.linuxfoundation.org/wp-content/uploads/2019/11/CKA-CKAD-FAQ-11.22.19.pdf

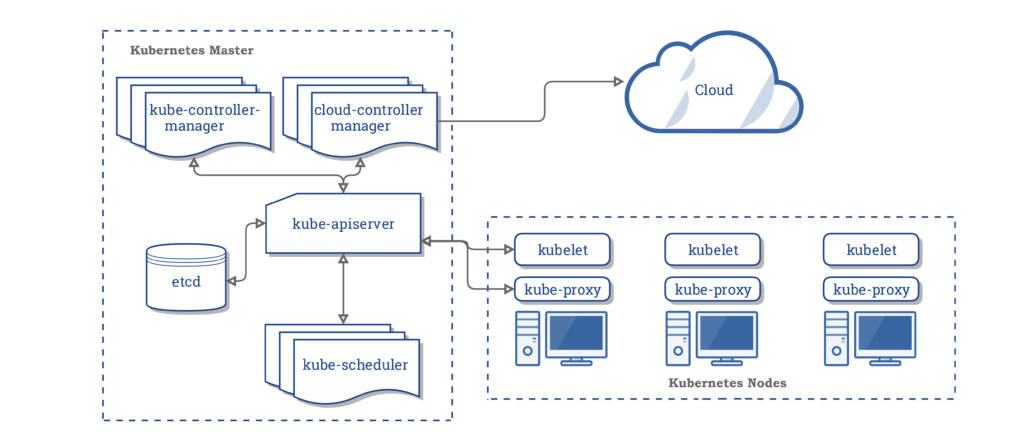

Kubernetes Cluster Architecture

What is Kubernetes?

Official Definition

Kubernetes is a portable, extensible, open-source platform for managing containerized workloads and services, that facilitates both declarative configuration and automation.

Kubernetes comes from the Greek word for helmsman: the person who steers a ship. The theme is reflected in the logo.

https://kubernetes.io/docs/concepts/overview/what-is-kubernetes/

https://kubernetes.io/docs/concepts/overview/components/

At a high level, a Kubernetes cluster has two major components:

- Kubernetes Master: manages, plans, schedules, and monitors the nodes

- Kubernetes (Worker) Nodes: host applications as containers

Other Components:

ETCD

- Think of it as a database that stores information about the cluster (Nodes, PODs, Configs, Secrets, Accounts, Roles, Bindings, …) in key-value format.

- Listens on port 2379 by default.

- All the information we see when we run the kubectl get command comes from the etcd server (via the kube-apiserver).

- Every change we make to the cluster (e.g., adding nodes, deploying pods or ReplicaSets) is recorded in the etcd server.

- A change is considered complete only once it has been written to etcd.

- When the cluster is set up with kubeadm, etcd runs as a pod in the kube-system namespace (kubectl get pods -n kube-system --> etcd-<node-name>, e.g. etcd-master).

- To list all the keys stored in etcd: kubectl exec etcd-master -n kube-system -- etcdctl get / --prefix --keys-only (depending on the etcd version and TLS setup, you may also need to set ETCDCTL_API=3 and pass the --cacert, --cert and --key flags).

- Uses the Raft consensus protocol.
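To make the key-value model concrete, here is a minimal in-memory sketch. It is not the etcd API: the ToyKVStore class and the sample values are hypothetical, and it assumes the kube-apiserver's default /registry/<resource>/<namespace>/<name> key layout. keys_with_prefix mimics what etcdctl get / --prefix --keys-only lists.

```python
from typing import Dict, List


class ToyKVStore:
    """Hypothetical in-memory key-value store; NOT the etcd client API."""

    def __init__(self) -> None:
        self._data: Dict[str, str] = {}
        self.revision = 0  # etcd keeps a store-wide revision that grows on every write

    def put(self, key: str, value: str) -> int:
        # A change only "exists" for the cluster once this write has succeeded.
        self.revision += 1
        self._data[key] = value
        return self.revision

    def get(self, key: str) -> str:
        return self._data[key]

    def keys_with_prefix(self, prefix: str) -> List[str]:
        # Rough analogue of: etcdctl get <prefix> --prefix --keys-only
        return sorted(k for k in self._data if k.startswith(prefix))


store = ToyKVStore()
# Assumed key layout: /registry/<resource>/<namespace>/<name>
# (values are JSON strings here purely for readability)
store.put("/registry/pods/default/my-test-pod", '{"kind": "Pod"}')
store.put("/registry/pods/kube-system/etcd-master", '{"kind": "Pod"}')
store.put("/registry/configmaps/kube-system/kubeadm-config", '{"kind": "ConfigMap"}')

print(store.keys_with_prefix("/registry/pods/"))
# ['/registry/pods/default/my-test-pod', '/registry/pods/kube-system/etcd-master']
```

Listing by prefix is what makes a flat key-value store usable as a hierarchy: "all pods" or "all pods in default" is just a shorter prefix.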

$ kubectl get pods -n kube-system |grep -i etcd

etcd-plakhera11.example.com 1/1 Running 4 9d

kube-scheduler

Identifies the right node to place a container on, depending on the worker node's capacity, taints, and tolerations.

- The scheduler is only responsible for deciding which POD goes on which node; it doesn't actually place the pod on the node. That's the job of the kubelet.

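- The scheduler places pods on nodes in two phases:

Filter Nodes: filter out nodes that don't meet the Pod's requirements (e.g., not enough CPU or memory).

Rank Nodes: score the remaining nodes, for example by how much CPU and memory would be left free after placing the Pod, and pick the highest-ranked one.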
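The two phases can be sketched in Python. This is a simplified model, not the kube-scheduler's code: the real scheduler has many more filter and scoring plugins, and the class names, the toleration check by taint name, and the score formula (free cores plus free GiB left after placement) are assumptions for illustration.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Node:
    name: str
    free_cpu: float          # CPU cores still available
    free_mem_gib: float      # memory still available, in GiB
    taints: List[str] = field(default_factory=list)


@dataclass
class Pod:
    cpu: float
    mem_gib: float
    tolerations: List[str] = field(default_factory=list)


def filter_nodes(nodes: List[Node], pod: Pod) -> List[Node]:
    """Phase 1 (filter): keep only nodes that satisfy the Pod's requirements."""
    return [
        n for n in nodes
        if n.free_cpu >= pod.cpu
        and n.free_mem_gib >= pod.mem_gib
        and all(t in pod.tolerations for t in n.taints)  # every taint must be tolerated
    ]


def score(node: Node, pod: Pod) -> float:
    """Phase 2 (rank): hypothetical score = resources left over after placement."""
    return (node.free_cpu - pod.cpu) + (node.free_mem_gib - pod.mem_gib)


def schedule(nodes: List[Node], pod: Pod) -> Optional[str]:
    feasible = filter_nodes(nodes, pod)
    if not feasible:
        return None  # no node fits: the Pod stays Pending
    return max(feasible, key=lambda n: score(n, pod)).name


nodes = [
    Node("node01", free_cpu=1, free_mem_gib=2),                   # too small
    Node("node02", free_cpu=4, free_mem_gib=8),
    Node("node03", free_cpu=8, free_mem_gib=16, taints=["gpu"]),  # tainted
    Node("node04", free_cpu=6, free_mem_gib=4),
]
print(schedule(nodes, Pod(cpu=2, mem_gib=1)))                       # node02
print(schedule(nodes, Pod(cpu=2, mem_gib=1, tolerations=["gpu"])))  # node03
```

Note that the scheduler only returns a node name; binding the Pod and starting its containers is still left to the kubelet.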

$ kubectl get pods -n kube-system

NAME READY STATUS RESTARTS AGE

kube-scheduler-plakhera11c.mylabserver.com 1/1 Running 5 11d

- Or you can check the running process:

$ ps aux|grep -i kube-scheduler

root 2019 0.7 0.4 139596 34428 ? Ssl 01:42 0:22 kube-scheduler --address=127.0.0.1 --kubeconfig=/etc/kubernetes/scheduler.conf --leader-elect=true

Controller Manager

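- Manages the various controllers in Kubernetes. A controller is a process that continuously monitors the state of the components in the cluster and works to bring the current state to the desired state.

1: Replication Controller: monitors the status of ReplicaSets to make sure the desired number of pods is always running in the set. If a pod dies, it creates another one.

2: Node Controller:

- It checks the status of the nodes every 5s (node monitor period).

- Node monitor grace period = 40s: if a node stops sending heartbeats, the controller waits this long before marking it unreachable.

- POD eviction timeout = 5m: if an unreachable node doesn't come back within this time, its pods are evicted (and, if they belong to a ReplicaSet, recreated on healthy nodes).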
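Both controllers follow a watch, compare, act loop. The sketch below models one reconciliation pass of a replication-style controller and the node controller's timing rules using the default values above. It is a simplification: the function names and pod names are hypothetical, and it assumes the eviction timeout starts counting once the 40s grace period has expired.

```python
from typing import List, Tuple

# Default timings from the text (seconds)
NODE_MONITOR_PERIOD = 5
NODE_MONITOR_GRACE_PERIOD = 40
POD_EVICTION_TIMEOUT = 5 * 60


def reconcile_replicas(desired: int, running: List[str], next_id: int) -> Tuple[List[str], List[str]]:
    """One pass of a replication-style control loop: compare desired vs. actual.

    Returns (pods_to_create, pods_to_delete). Pod names are hypothetical.
    """
    missing = max(0, desired - len(running))
    to_create = [f"pod-{next_id + i}" for i in range(missing)]
    to_delete = running[desired:]  # empty unless there are too many pods
    return to_create, to_delete


def node_state(seconds_since_heartbeat: int) -> str:
    """Simplified view of what the node controller concludes about a node."""
    if seconds_since_heartbeat <= NODE_MONITOR_GRACE_PERIOD:
        return "healthy"
    if seconds_since_heartbeat <= NODE_MONITOR_GRACE_PERIOD + POD_EVICTION_TIMEOUT:
        return "unreachable"
    return "evict-pods"


def first_eviction_check(last_heartbeat: int) -> int:
    """Time of the first periodic check (every NODE_MONITOR_PERIOD) that evicts pods."""
    t = last_heartbeat
    while node_state(t - last_heartbeat) != "evict-pods":
        t += NODE_MONITOR_PERIOD
    return t


print(reconcile_replicas(3, ["pod-1"], next_id=2))        # (['pod-2', 'pod-3'], [])
print(reconcile_replicas(2, ["a", "b", "c"], next_id=4))  # ([], ['c'])
print(first_eviction_check(0))                            # 345
```

With these defaults, pods on a node that went silent at t=0 are evicted at the first 5-second check after 40s + 5m, i.e. at 345s.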

$ kubectl get pods -n kube-system

NAME READY STATUS RESTARTS AGE

kube-controller-manager-plakhera11c.mylabserver.com 1/1 Running 5 11d

- To see the effective options, check the running process:

$ ps aux|grep -i kube-controller-manager

root 2095 2.3 1.1 205516 93028 ? Ssl 01:42 0:56 kube-controller-manager --address=127.0.0.1 --allocate-node-cidrs=true --authentication-kubeconfig=/etc/kubernetes/controller-manager.conf --authorization-kubeconfig=/etc/kubernetes/controller-manager.conf --client-ca-file=/etc/kubernetes/pki/ca.crt --cluster-cidr=10.244.0.0/16 --cluster-signing-cert-file=/etc/kubernetes/pki/ca.crt --cluster-signing-key-file=/etc/kubernetes/pki/ca.key --controllers=*,bootstrapsigner,tokencleaner --kubeconfig=/etc/kubernetes/controller-manager.conf --leader-elect=true --node-cidr-mask-size=24 --requestheader-client-ca-file=/etc/kubernetes/pki/front-proxy-ca.crt --root-ca-file=/etc/kubernetes/pki/ca.crt --service-account-private-key-file=/etc/kubernetes/pki/sa.key --use-service-account-credentials=true

cloud_u+ 2263 0.0 0.0 14988 2652 pts/1 R+ 02:22 0:00 grep --color=auto -i kube-controller-manager

Kube ApiServer

- It is the central component through which all the other components talk to each other. It exposes the Kubernetes API, which external users (for example, via kubectl) use to perform cluster operations.

- When we run any kubectl command (e.g., kubectl get nodes), behind the scenes:

kubectl --> kube-apiserver (authenticates and validates the request) --> etcd cluster (retrieves the data) --> response sent back with the requested data

- kube-apiserver is the only component that interacts directly with the etcd datastore.

- If the cluster was installed via kubeadm, kube-apiserver runs as a pod in the kube-system namespace:

$ kubectl get pods -n kube-system

NAME READY STATUS RESTARTS AGE

kube-apiserver-plakhera11.example.com 1/1 Running 5 11d

- OR you can check the process:

$ ps aux|grep -i kube-api

root 2125 2.9 3.2 446884 261124 ? Ssl 01:42 0:58 kube-apiserver --authorization-mode=Node,RBAC --advertise-address=172.31.99.206 --allow-privileged=true --client-ca-file=/etc/kubernetes/pki/ca.crt --enable-admission-plugins=NodeRestriction --enable-bootstrap-token-auth=true --etcd-cafile=/etc/kubernetes/pki/etcd/ca.crt --etcd-certfile=/etc/kubernetes/pki/apiserver-etcd-client.crt --etcd-keyfile=/etc/kubernetes/pki/apiserver-etcd-client.key --etcd-servers=https://127.0.0.1:2379 --insecure-port=0 --kubelet-client-certificate=/etc/kubernetes/pki/apiserver-kubelet-client.crt --kubelet-client-key=/etc/kubernetes/pki/apiserver-kubelet-client.key --kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname --proxy-client-cert-file=/etc/kubernetes/pki/front-proxy-client.crt --proxy-client-key-file=/etc/kubernetes/pki/front-proxy-client.key --requestheader-allowed-names=front-proxy-client --requestheader-client-ca-file=/etc/kubernetes/pki/front-proxy-ca.crt --requestheader-extra-headers-prefix=X-Remote-Extra- --requestheader-group-headers=X-Remote-Group --requestheader-username-headers=X-Remote-User --secure-port=6443 --service-account-key-file=/etc/kubernetes/pki/sa.pub --service-cluster-ip-range=10.96.0.0/12 --tls-cert-file=/etc/kubernetes/pki/apiserver.crt --tls-private-key-file=/etc/kubernetes/pki/apiserver.key

- Container Runtime Engine: e.g., Docker or rkt (Rocket)

Kubelet

- An agent that runs on each node in the cluster. It registers the node with the Kubernetes cluster, listens for instructions from the kube-apiserver, and creates or destroys containers (Pods) on its node through the container runtime. It also periodically reports the status of the node and its containers back to the kube-apiserver.

NOTE: kubeadm doesn't deploy kubelets; you must install the kubelet on each node yourself.

To check the status of the kubelet agent:

$ systemctl status kubelet

● kubelet.service - kubelet: The Kubernetes Node Agent

Loaded: loaded (/lib/systemd/system/kubelet.service; enabled; vendor preset: enabled)

Drop-In: /etc/systemd/system/kubelet.service.d

└─10-kubeadm.conf

Active: active (running) since Mon 2020-01-27 01:41:58 UTC; 54min ago

Docs: https://kubernetes.io/docs/home/

Main PID: 785 (kubelet)

Tasks: 17 (limit: 2318)

CGroup: /system.slice/kubelet.service

└─785 /usr/bin/kubelet --bootstrap-kubeconfig=/etc/kubernetes/bootstrap-kubelet.conf --kubeconfig=/etc/kubernetes/kubelet.conf --config=/var/lib/kubelet/config.yaml --cgroup-driver=cgroupfs --n

OR

$ ps aux|grep -i kubelet

root 785 2.3 4.2 1345380 85736 ? Ssl 01:41 1:17 /usr/bin/kubelet --bootstrap-kubeconfig=/etc/kubernetes/bootstrap-kubelet.conf --kubeconfig=/etc/kubernetes/kubelet.conf --config=/var/lib/kubelet/config.yaml --cgroup-driver=cgroupfs --network-plugin=cni --pod-infra-container-image=k8s.gcr.io/pause:3.1 --resolv-conf=/run/systemd/resolve/resolv.conf

Kube-proxy

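- kube-proxy runs on every node in the cluster. It makes Services reachable: whenever a Service is created, it makes sure traffic sent to that Service is forwarded to the right backend Pods.

- It watches the kube-apiserver for new Services and endpoints and implements local iptables or IPVS rules to handle routing and load balancing of that traffic. (Giving each Pod its own unique IP address is the job of the Pod network / CNI plugin, not kube-proxy.)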
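A toy model of the load-balancing part: a Service's virtual IP maps to a list of backend Pod endpoints, and each new connection goes to one of them. This is hypothetical code, not kube-proxy's implementation; round-robin is only meant to resemble IPVS mode's default rr scheduler, and random choice to resemble iptables mode. The Service IP 10.96.0.20 is made up (picked from the 10.96.0.0/12 service range in the kube-apiserver flags above), and the Pod IPs are borrowed from the kubectl get pods -o wide output later in this post.

```python
import itertools
import random
from typing import Dict, Iterator, List


class ToyServiceProxy:
    """Hypothetical model of what kube-proxy sets up on each node:
    traffic to a Service's virtual IP is sent to one of its backend Pods."""

    def __init__(self) -> None:
        self._endpoints: Dict[str, List[str]] = {}
        self._rr: Dict[str, Iterator[str]] = {}

    def update_endpoints(self, service_vip: str, endpoints: List[str]) -> None:
        # kube-proxy watches the API server and rewrites its rules when endpoints change
        self._endpoints[service_vip] = list(endpoints)
        self._rr[service_vip] = itertools.cycle(self._endpoints[service_vip])

    def _check(self, service_vip: str) -> None:
        if not self._endpoints.get(service_vip):
            raise LookupError(f"no endpoints for {service_vip}")

    def pick_round_robin(self, service_vip: str) -> str:
        # Similar in spirit to IPVS mode's default "rr" scheduler
        self._check(service_vip)
        return next(self._rr[service_vip])

    def pick_random(self, service_vip: str, rng: random.Random) -> str:
        # Similar in spirit to iptables mode, which picks a backend at random
        self._check(service_vip)
        return rng.choice(self._endpoints[service_vip])


proxy = ToyServiceProxy()
proxy.update_endpoints("10.96.0.20:80", ["10.244.1.65:80", "10.244.2.99:80"])
print([proxy.pick_round_robin("10.96.0.20:80") for _ in range(4)])
# ['10.244.1.65:80', '10.244.2.99:80', '10.244.1.65:80', '10.244.2.99:80']
```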

$ kubectl get pods -n kube-system

NAME READY STATUS RESTARTS AGE

kube-proxy-4lvxx 1/1 Running 4 11d

kube-proxy-7w6p4 1/1 Running 4 11d

kube-proxy-tfrwv 1/1 Running 5 11d

POD

- Containers are encapsulated in Kubernetes objects known as Pods. A Pod itself doesn't actually run anything; it's just a sandbox for hosting containers.

- In other words, it provides a shared execution environment: a set of resources shared by every container that is part of the Pod (e.g., IP address, ports, hostname, sockets, memory, volumes, etc.).

- A Pod is a single instance of an application and the smallest object we can create in Kubernetes. You cannot run a container directly on a Kubernetes cluster; containers must always run inside Pods.

- To see the list of pods:

$ kubectl get pods

NAME READY STATUS RESTARTS AGE

nginx-7cdbd8cdc9-tzbk6 1/1 Running 1 3d22h

Creating a POD using YAML file

- Kubernetes uses YAML files as input to create objects such as Pods, ReplicaSets, Deployments, etc.

- kubectl sends the contents of this YAML file to the API server in an HTTP POST request.

- The API server validates the request and writes the object to the etcd store. The scheduler then assigns the Pod to a healthy node with enough available resources, and the kubelet on that node starts its containers.

- A Kubernetes YAML file always contains these four top-level fields:

apiVersion:
kind:
metadata:
spec:

- apiVersion: the version of the Kubernetes API used to create the object; it depends on the type of object we are trying to create.

- Think of the version field as defining the object's schema; newer is usually better.

| Kind | Version |
| --- | --- |
| POD | v1 |
| Service | v1 |
| ReplicaSet/Deployment | apps/v1 |

- kind: refers to the type of object we are trying to create, in this case Pod. Other possible values include Service, ReplicaSet, and Deployment.

- metadata: data about the object, such as its name and labels.

metadata:
  name: mytest-pod
  labels:
    app: mytestapp

In the above example, the name of our pod is mytest-pod (a string), and we assign labels to it. labels is a dictionary and can contain any key-value pairs.

- spec: this is where we specify the containers (and their images) we need in the Pod.

spec:
  containers:
  - name: mynginx-container
    image: nginx

In the above example, we give our container the name mynginx-container and ask Kubernetes to pull the nginx image from Docker Hub.

Note the dash (-) in front of name: it indicates a list item, so we can specify multiple containers here.
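Because kubectl also accepts JSON manifests, the same Pod can be built as a plain Python dictionary, which makes the four top-level fields and the containers list explicit. A sketch using only the standard library (json rather than a YAML library); the pod_manifest helper and the API_VERSIONS mapping are hypothetical names, with the mapping copied from the apiVersion table above.

```python
import json
from typing import Dict, List

# apiVersion per kind, taken from the table above
API_VERSIONS = {"Pod": "v1", "Service": "v1", "ReplicaSet": "apps/v1", "Deployment": "apps/v1"}


def pod_manifest(name: str, labels: Dict[str, str], containers: List[Dict[str, str]]) -> dict:
    """Build a Pod manifest with the four required top-level fields."""
    return {
        "apiVersion": API_VERSIONS["Pod"],
        "kind": "Pod",
        "metadata": {"name": name, "labels": labels},
        # A list, which is what the leading "-" expresses in the YAML version
        "spec": {"containers": containers},
    }


manifest = pod_manifest(
    name="mytest-pod",
    labels={"app": "mytestapp"},
    containers=[{"name": "mynginx-container", "image": "nginx"}],
)

# Save the output as e.g. pod.json, then: kubectl create -f pod.json
print(json.dumps(manifest, indent=2))
```

Adding a second container is just appending another dictionary to the containers list, exactly like adding another "- name:" entry in YAML.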

Once the file is ready, we can create the pod using:

kubectl create -f <filename>.yml

- Once the pod is created, you can verify it using:

$ kubectl get pods

NAME READY STATUS RESTARTS AGE

my-test-pod 1/1 Running 0 3m10s

- You can add the --watch flag to the kubectl get pods command to monitor changes as they happen.

- The -o wide flag adds a few more columns, such as the Pod IP and the node it runs on (NOTE: I am showing a different example here):

$ kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

frontend-6cww6 1/1 Running 8 10d 10.244.2.99 plakhera13c.mylabserver.com <none> <none>

frontend-dw5s4 1/1 Running 8 10d 10.244.1.65 plakhera12c.mylabserver.com <none> <none>

- The -o yaml flag returns a full copy of the Pod manifest from the cluster store. The output is divided into two main parts:

- The desired state (.spec section)

- The current observed state (.status section)

$ kubectl get pods my-test-pod -o yaml

apiVersion: v1
kind: Pod
metadata:
  creationTimestamp: "2020-01-27T04:51:59Z"
  name: my-test-pod
  namespace: default
  resourceVersion: "302557"
  selfLink: /api/v1/namespaces/default/pods/my-test-pod
  uid: c0a48e63-40c0-11ea-8152-06e53f8e1eee
spec:
  containers:
  - image: nginx
    imagePullPolicy: Always
    name: my-test-pod
    resources: {}
    terminationMessagePath: /dev/termination-log
    terminationMessagePolicy: File
    volumeMounts:
    - mountPath: /var/run/secrets/kubernetes.io/serviceaccount
      name: default-token-s9cz4
      readOnly: true
  dnsPolicy: ClusterFirst
  enableServiceLinks: true
  nodeName: plakhera13c.mylabserver.com
  priority: 0
  restartPolicy: Always
  schedulerName: default-scheduler
  securityContext: {}
  serviceAccount: default
  serviceAccountName: default
  terminationGracePeriodSeconds: 30
  tolerations:
  - effect: NoExecute
    key: node.kubernetes.io/not-ready
    operator: Exists
    tolerationSeconds: 300
  - effect: NoExecute
    key: node.kubernetes.io/unreachable
    operator: Exists
    tolerationSeconds: 300
  volumes:
  - name: default-token-s9cz4
    secret:
      defaultMode: 420
      secretName: default-token-s9cz4
status:
  conditions:
  - lastProbeTime: null
    lastTransitionTime: "2020-01-27T04:51:59Z"
    status: "True"
    type: Initialized
  - lastProbeTime: null
    lastTransitionTime: "2020-02-07T03:19:36Z"
    status: "True"
    type: Ready
  - lastProbeTime: null
    lastTransitionTime: "2020-02-07T03:19:36Z"
    status: "True"
    type: ContainersReady
  - lastProbeTime: null
    lastTransitionTime: "2020-01-27T04:51:59Z"
    status: "True"
    type: PodScheduled
  containerStatuses:
  - containerID: docker://8b82b56654223e400c8f3f6709dd2636e4e0f6602eeb699e793ca57ee65942d8
    image: nginx:latest
    imageID: docker-pullable://nginx@sha256:ad5552c786f128e389a0263104ae39f3d3c7895579d45ae716f528185b36bc6f
    lastState:
      terminated:
        containerID: docker://74dd6acef0394c71285cd8cd0eb0afbe1fe0fd5b2c6c51c56b4c8ef3eae5bdcd
        exitCode: 0
        finishedAt: "2020-02-04T18:16:08Z"
        reason: Completed
        startedAt: "2020-02-04T14:17:31Z"
    name: my-test-pod
    ready: true
    restartCount: 9
    state:
      running:
        startedAt: "2020-02-07T03:19:35Z"
  hostIP: 172.31.98.89
  phase: Running
  podIP: 10.244.2.101
  qosClass: BestEffort
  startTime: "2020-01-27T04:51:59Z"

- But my pod manifest is just 8 lines long, while this output is far longer. So where does all this extra information come from?

- There are two main sources:

- A Kubernetes Pod object has far more properties than the ones we defined in the manifest. Any property we don't set explicitly is automatically filled in by Kubernetes with a default value.

- As mentioned above, the output includes the Pod's current observed state (.status) as well as its desired state (.spec).

- Another great command to get detailed information about a Pod:
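One way to see which fields Kubernetes defaulted is to compare what we wrote with what the cluster returned. The sketch below hard-codes a trimmed copy of the -o yaml output above as JSON (in practice you could capture kubectl get pods my-test-pod -o json instead); defaulted_fields is a hypothetical helper, and the live data is shortened for readability.

```python
import json
from typing import Any, Dict, List

# The container entry we wrote in our 8-line pod.yml
written_container = {"name": "my-test-pod", "image": "nginx"}

# A trimmed copy of what the cluster returned for the same Pod
live_pod = json.loads("""
{
  "apiVersion": "v1",
  "kind": "Pod",
  "metadata": {"name": "my-test-pod", "namespace": "default"},
  "spec": {
    "containers": [{
      "image": "nginx",
      "imagePullPolicy": "Always",
      "name": "my-test-pod",
      "resources": {},
      "terminationMessagePath": "/dev/termination-log",
      "terminationMessagePolicy": "File"
    }],
    "restartPolicy": "Always"
  },
  "status": {"phase": "Running", "podIP": "10.244.2.101"}
}
""")


def defaulted_fields(written: Dict[str, Any], live: Dict[str, Any]) -> List[str]:
    """Keys present on the live object that we never set ourselves."""
    return sorted(k for k in live if k not in written)


desired = live_pod["spec"]     # what we asked for (plus defaults)
observed = live_pod["status"]  # what the cluster currently reports

print(defaulted_fields(written_container, desired["containers"][0]))
# ['imagePullPolicy', 'resources', 'terminationMessagePath', 'terminationMessagePolicy']
print(observed["phase"])  # Running
```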

$ kubectl describe pod my-test-pod

Name:               my-test-pod
Namespace:          default
Priority:           0
PriorityClassName:  <none>
Node:               plakhera13c.mylabserver.com/172.31.98.89
Start Time:         Mon, 27 Jan 2020 04:51:59 +0000
Labels:             <none>
Annotations:        <none>
Status:             Running
IP:                 10.244.2.12
Containers:
  my-test-pod:
    Container ID:   docker://e83d9d4dc1a1f01de04a1ea4eae834c6978b1a607eb47950e2862c353dc6a22e
    Image:          nginx
    Image ID:       docker-pullable://nginx@sha256:70821e443be75ea38bdf52a974fd2271babd5875b2b1964f05025981c75a6717
    Port:           <none>
    Host Port:      <none>
    State:          Running
      Started:      Mon, 27 Jan 2020 04:52:01 +0000
    Ready:          True
    Restart Count:  0
    Environment:    <none>
    Mounts:
      /var/run/secrets/kubernetes.io/serviceaccount from default-token-s9cz4 (ro)
Conditions:
  Type              Status
  Initialized       True
  Ready             True
  ContainersReady   True
  PodScheduled      True
Volumes:
  default-token-s9cz4:
    Type:        Secret (a volume populated by a Secret)
    SecretName:  default-token-s9cz4
    Optional:    false
QoS Class:       BestEffort
Node-Selectors:  <none>
Tolerations:     node.kubernetes.io/not-ready:NoExecute for 300s
                 node.kubernetes.io/unreachable:NoExecute for 300s
Events:
  Type    Reason     Age    From                                  Message
  ----    ------     ----   ----                                  -------
  Normal  Scheduled  7m10s  default-scheduler                     Successfully assigned default/my-test-pod to plakhera13c.mylabserver.com
  Normal  Pulling    7m9s   kubelet, plakhera13c.mylabserver.com  pulling image "nginx"
  Normal  Pulled     7m8s   kubelet, plakhera13c.mylabserver.com  Successfully pulled image "nginx"
  Normal  Created    7m8s   kubelet, plakhera13c.mylabserver.com  Created container
  Normal  Started    7m8s   kubelet, plakhera13c.mylabserver.com  Started container

- Complete pod.yml file

$ cat pod.yml

apiVersion: v1
kind: Pod
metadata:
  name: my-test-pod
spec:
  containers:
  - name: my-test-pod
    image: nginx

- To log in to a container running in a Pod, use kubectl exec:

$ kubectl exec -it my-test-pod -- sh

#

- To delete a Pod:

$ kubectl delete pod my-test-pod

pod "my-test-pod" deleted

Please join me on my journey by following any of the links below:

- Website: https://100daysofdevops.com/

- Twitter: @100daysofdevops OR @lakhera2015

- Facebook: https://www.facebook.com/groups/795382630808645/

- Medium: https://medium.com/@devopslearning

- GitHub: https://github.com/100daysofdevops/100daysofdevops

- Slack: https://join.slack.com/t/100daysofdevops/shared_invite/enQtODQ4OTUxMTYxMzc5LTYxZjBkNGE3ZjE0OTE3OGFjMDUxZTBjNDZlMDVhNmIyZWNiZDhjMTM1YmI4MTkxZTQwNzcyMDE0YmYxYjMyMDM

- YouTube Channel: https://www.youtube.com/user/laprashant/videos?view_as=subscriber

- Meetup: https://www.meetup.com/100daysofdevops/