- To see more information about the swarm, run docker info and look at the Swarm block of the output:

$ docker info

Client:
 Debug Mode: false

Server:
 Containers: 0
  Running: 0
  Paused: 0
  Stopped: 0
 Images: 0
 Server Version: 19.03.4
 Storage Driver: devicemapper
  Pool Name: docker-259:1-67129954-pool
  Pool Blocksize: 65.54kB
  Base Device Size: 10.74GB
  Backing Filesystem: xfs
  Udev Sync Supported: true
  Data file: /dev/loop0
  Metadata file: /dev/loop1
  Data loop file: /var/lib/docker/devicemapper/devicemapper/data
  Metadata loop file: /var/lib/docker/devicemapper/devicemapper/metadata
  Data Space Used: 11.73MB
  Data Space Total: 107.4GB
  Data Space Available: 13.43GB
  Metadata Space Used: 17.36MB
  Metadata Space Total: 2.147GB
  Metadata Space Available: 2.13GB
  Thin Pool Minimum Free Space: 10.74GB
  Deferred Removal Enabled: true
  Deferred Deletion Enabled: true
  Deferred Deleted Device Count: 0
  Library Version: 1.02.158-RHEL7 (2019-05-13)
 Logging Driver: json-file
 Cgroup Driver: cgroupfs
 Plugins:
  Volume: local
  Network: bridge host ipvlan macvlan null overlay
  Log: awslogs fluentd gcplogs gelf journald json-file local logentries splunk syslog
 Swarm: active
  NodeID: w45sgx9gjpijtrq445avrd9wt
  Is Manager: true
  ClusterID: xz9d4b2z4we24wcg7j0e7zng4
  Managers: 1
  Nodes: 3
  Default Address Pool: 10.0.0.0/8
  SubnetSize: 24
  Data Path Port: 4789
  Orchestration:
   Task History Retention Limit: 5
  Raft:
   Snapshot Interval: 10000
   Number of Old Snapshots to Retain: 0
   Heartbeat Tick: 1
   Election Tick: 10
  Dispatcher:
   Heartbeat Period: 5 seconds
  CA Configuration:
   Expiry Duration: 3 months
   Force Rotate: 0
  Autolock Managers: false
  Root Rotation In Progress: false
  Node Address: 172.31.21.46
  Manager Addresses:
   172.31.21.46:2377
 Runtimes: runc
 Default Runtime: runc
 Init Binary: docker-init
 containerd version: b34a5c8af56e510852c35414db4c1f4fa6172339
 runc version: 3e425f80a8c931f88e6d94a8c831b9d5aa481657
 init version: fec3683
 Security Options:
  seccomp
   Profile: default
 Kernel Version: 3.10.0-1062.1.2.el7.x86_64
 Operating System: CentOS Linux 7 (Core)
 OSType: linux
 Architecture: x86_64
 CPUs: 2
 Total Memory: 1.758GiB
 Name: plakhera12c.mylabserver.com
 ID: F4RB:NVBA:5ABO:CRPA:K3HA:DPAG:RHX5:KXRK:NXP2:JNIG:YM46:QOYP
 Docker Root Dir: /var/lib/docker
 Debug Mode: false
 Registry: https://index.docker.io/v1/
 Labels:
 Experimental: false
 Insecure Registries:
  127.0.0.0/8
 Live Restore Enabled: false
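Only the Swarm block of that output matters for this walkthrough. As a minimal sketch, the same values can be read with `docker info --format` and a Go template instead of scanning the full dump. The function names `swarm_summary` and `is_swarm_manager` are hypothetical; the template fields `.Swarm.LocalNodeState`, `.Swarm.NodeID`, and `.Swarm.ControlAvailable` correspond to the `Swarm`, `NodeID`, and `Is Manager` lines printed above.

```shell
# swarm_summary: print "STATE NODE_ID IS_MANAGER" for the local engine.
# Hypothetical helper; it only wraps `docker info --format`.
swarm_summary() {
  docker info --format \
    '{{.Swarm.LocalNodeState}} {{.Swarm.NodeID}} {{.Swarm.ControlAvailable}}'
}

# is_swarm_manager: succeed only if this engine is an active swarm manager.
is_swarm_manager() {
  # Split the summary into positional parameters (local to this function).
  set -- $(swarm_summary)
  [ "$1" = "active" ] && [ "$3" = "true" ]
}
```

A script could guard manager-only commands with something like `is_swarm_manager || echo "run this on a manager node"`, since `docker service` and `docker node` commands only work on managers.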

Service

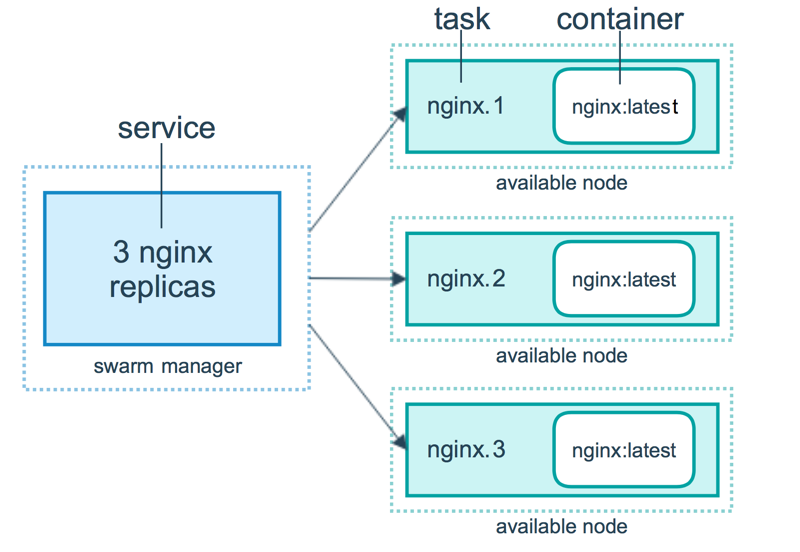

- To deploy an application image when Docker Engine is in swarm mode, you create a service.

- When you deploy the service to the swarm, the swarm manager accepts your service definition as the desired state for the service. Then it schedules the service on nodes in the swarm as one or more replica tasks. The tasks run independently of each other on nodes in the swarm.

$ docker service create --name mytestswarmserver --replicas 1 nginx

wgsg0ehf45kxe6fzit4nt50ey

overall progress: 0 out of 1 tasks

overall progress: 0 out of 1 tasks

overall progress: 1 out of 1 tasks

1/1: running [==================================================>]

- The docker service create command creates the service.

- The --name flag names the service mytestswarmserver.

- The --replicas flag specifies the desired state of 1 running instance.

- The argument nginx defines the service as an nginx Linux container.
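Because `--replicas` only declares a desired state, a script that depends on the service usually has to wait until the running count catches up. The following is a sketch under assumptions, not part of the original walkthrough: `replicas_converged` and `wait_for_service` are hypothetical helper names, and the check relies on `docker service ls --format '{{.Name}} {{.Replicas}}'` printing values such as `1/1`. The `name` filter can match more than the exact name, so the awk step compares the name exactly.

```shell
# replicas_converged SERVICE
# Succeeds when the service exists and running replicas == desired replicas.
replicas_converged() {
  service=$1
  docker service ls --filter "name=$service" \
    --format '{{.Name}} {{.Replicas}}' |
    awk -v svc="$service" '
      $1 == svc {
        split($2, r, "/")        # "3/5" -> r[1] = 3, r[2] = 5
        found = 1
        ok = (r[1] == r[2])
      }
      END { if (found && ok) exit 0; exit 1 }'
}

# wait_for_service SERVICE [TRIES]
# Polls once per second, up to TRIES times (default 30).
wait_for_service() {
  service=$1
  tries=${2:-30}
  while [ "$tries" -gt 0 ]; do
    replicas_converged "$service" && return 0
    tries=$((tries - 1))
    sleep 1
  done
  return 1
}
```

For the service created above, `wait_for_service mytestswarmserver` would return success once the REPLICAS column reads 1/1.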

Run docker service ls to see the list of running services:

$ docker service ls

ID NAME MODE REPLICAS IMAGE PORTS

wgsg0ehf45kx mytestswarmserver replicated 1/1 nginx:latest

The following command shows all the tasks that are part of the mytestswarmserver service:

$ docker service ps mytestswarmserver

ID NAME IMAGE NODE DESIRED STATE CURRENT STATE ERROR PORTS

0q0a4qt7p76q mytestswarmserver.1 nginx:latest plakhera12c.mylabserver.com Running Running 10 minutes ago

- As you can see, the service is currently running on plakhera12c.mylabserver.com.

- You can also verify that the nginx container is up and running on plakhera12c.mylabserver.com:

$ docker container ls

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

208d1a371e9a nginx:latest "nginx -g 'daemon of…" 15 minutes ago Up 15 minutes 80/tcp mytestswarmserver.1.0q0a4qt7p76q38sew5u8thiuy

- Let's run an experiment: stop the nginx container on plakhera12c.mylabserver.com.

$ docker stop 208d1a371e9a

208d1a371e9a

- Now for the swarm magic: the manager automatically brings the service back up, this time on plakhera13c.mylabserver.com.

$ docker service ps wgsg0ehf45kx

ID NAME IMAGE NODE DESIRED STATE CURRENT STATE ERROR PORTS

pivgm8nsupi6 mytestswarmserver.1 nginx:latest plakhera13c.mylabserver.com Ready Preparing 2 seconds ago

k95zl8mmb5c1 \_ mytestswarmserver.1 nginx:latest plakhera12c.mylabserver.com Shutdown Complete about a minute ago
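The task history above keeps the old, shut-down task next to its replacement. To see only where the service lives right now, the history rows can be filtered out. This is a minimal sketch: `active_nodes` is a hypothetical helper, and it assumes the `--format` placeholders `.Node` and `.DesiredState` of `docker service ps`.

```shell
# active_nodes SERVICE
# Prints one node name per task whose desired state is not Shutdown,
# so replaced or stopped tasks from the history are skipped.
active_nodes() {
  docker service ps --format '{{.Node}} {{.DesiredState}}' "$1" |
    awk '$2 != "Shutdown" { print $1 }'
}
```

Run against the output shown above, this would list only plakhera13c.mylabserver.com, because the task on plakhera12c.mylabserver.com has the desired state Shutdown.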

- One more test: log in to plakhera13c.mylabserver.com and shut down the Docker daemon itself.

$ sudo systemctl stop docker

- Ready for more swarm magic? The service comes back up on plakhera12c.mylabserver.com without any intervention.

$ docker service ps wgsg0ehf45kx

ID NAME IMAGE NODE DESIRED STATE CURRENT STATE ERROR PORTS

o5f7vz5e9bjx mytestswarmserver.1 nginx:latest plakhera12c.mylabserver.com Running Running 4 seconds ago

pivgm8nsupi6 \_ mytestswarmserver.1 nginx:latest plakhera13c.mylabserver.com Shutdown Running 23 seconds ago

k95zl8mmb5c1 \_ mytestswarmserver.1 nginx:latest plakhera12c.mylabserver.com Shutdown Complete 5 minutes ago

- Scaling Swarm Service: The scale command lets you scale one or more replicated services up or down to the desired number of replicas.

$ docker service scale mytestswarmserver=5

mytestswarmserver scaled to 5

overall progress: 5 out of 5 tasks

1/5: running [==================================================>]

2/5: running [==================================================>]

3/5: running [==================================================>]

4/5: running [==================================================>]

5/5: running [==================================================>]

verify: Service converged

- Check the list of tasks for the service again:

$ docker service ps mytestswarmserver

ID NAME IMAGE NODE DESIRED STATE CURRENT STATE ERROR PORTS

o5f7vz5e9bjx mytestswarmserver.1 nginx:latest plakhera12c.mylabserver.com Running Running 2 hours ago plakhera14c.mylabserver.com Running Running 2 minutes ago

9hond3nsebry mytestswarmserver.3 nginx:latest plakhera14c.mylabserver.com Running Running 2 minutes ago

q27r94oby3mb mytestswarmserver.4 nginx:latest plakhera12c.mylabserver.com Running Running 2 minutes ago

36wwuepguejo mytestswarmserver.5 nginx:latest plakhera14c.mylabserver.com Running Running 2 minutes ago
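With more replicas, it becomes useful to see how the tasks are spread across nodes rather than reading the table row by row. A small sketch, assuming the `desired-state` filter and the `.Node` format placeholder of `docker service ps`; `tasks_per_node` is a hypothetical name.

```shell
# tasks_per_node SERVICE
# Prints "NODE COUNT" for every node that holds running tasks of the service.
tasks_per_node() {
  docker service ps --filter desired-state=running \
    --format '{{.Node}}' "$1" |
    sort | uniq -c | awk '{ print $2, $1 }'
}
```

For the five tasks listed above, this would report plakhera12c.mylabserver.com and plakhera14c.mylabserver.com with their task counts; plakhera13c.mylabserver.com would be absent because its Docker daemon was stopped earlier.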

- Just as we scaled the service up, we can scale it down:

$ docker service scale mytestswarmserver=3

mytestswarmserver scaled to 3

overall progress: 3 out of 3 tasks

1/3: running [==================================================>]

2/3: running [==================================================>]

3/3: running [==================================================>]

verify: Service converged

- Similar to scale, there is the update command:

$ docker service update --replicas 4 mytestswarmserver

mytestswarmserver

overall progress: 4 out of 4 tasks

1/4: running [==================================================>]

2/4: running [==================================================>]

3/4: running [==================================================>]

4/4: running [==================================================>]

verify: Service converged

- But there is one subtle difference between scale and update.

- To understand it, let's create one more service:

$ docker service create --name mytestswarmserver01 --replicas 2 nginx

x6wdo9c8nzi42xr58bqd9ma7o

overall progress: 2 out of 2 tasks

1/2: running [==================================================>]

2/2: running [==================================================>]

verify: Service converged

- Using docker service scale, you can scale multiple services at once:

$ docker service scale mytestswarmserver=5 mytestswarmserver01=3

mytestswarmserver scaled to 5

mytestswarmserver01 scaled to 3

overall progress: 5 out of 5 tasks

1/5: running [==================================================>]

2/5: running [==================================================>]

3/5: running [==================================================>]

4/5: running [==================================================>]

5/5: running [==================================================>]

verify: Service converged

overall progress: 3 out of 3 tasks

1/3: running [==================================================>]

2/3: running [==================================================>]

3/3: running [==================================================>]

verify: Service converged
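As the command above shows, `docker service scale` takes several SERVICE=REPLICAS pairs in one call. When many services should get the same replica count, the pairs can be generated instead of typed. A minimal sketch, where `scale_all` is a hypothetical helper name:

```shell
# scale_all COUNT SERVICE [SERVICE...]
# Builds one SERVICE=COUNT pair per service and passes them all to a
# single `docker service scale` call.
scale_all() {
  if [ "$#" -lt 2 ]; then
    echo "usage: scale_all COUNT SERVICE [SERVICE...]" >&2
    return 2
  fi
  count=$1
  shift
  # Rewrite the positional parameters in place: append the pair for each
  # service, then drop the bare name from the front.
  for svc in "$@"; do
    set -- "$@" "$svc=$count"
    shift
  done
  docker service scale "$@"
}
```

For example, `scale_all 3 mytestswarmserver mytestswarmserver01` would run `docker service scale mytestswarmserver=3 mytestswarmserver01=3`.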

- But with update, you can only change one service at a time:

$ docker service update --replicas 6 mytestswarmserver mytestswarmserver01

"docker service update" requires exactly 1 argument.

See 'docker service update --help'.

Usage: docker service update [OPTIONS] SERVICE

Update a service

Replicated Services/Global Services

So far, in the replicated services model, the swarm manager distributes a specific number of replica tasks among the nodes based upon the scale you set in the desired state.

For global services, the swarm runs one task for the service on every available node in the cluster. For example, suppose we have a monitoring service whose daemon needs to run on every swarm node:

$ docker service create --name myglobal --mode global nginx

juqr8xuetjh12wfbhytbcu4ds

overall progress: 3 out of 3 tasks

x1v3x9ejsnpz: running [==================================================>]

w45sgx9gjpij: running [==================================================>]

b5lyurguajin: running [==================================================>]

verify: Service converged

- To verify it:

$ docker service ps myglobal

ID NAME IMAGE NODE DESIRED STATE CURRENT STATE ERROR PORTS

d3mr45xdeqen myglobal.x1v3x9ejsnpzgateb9xhbi431 nginx:latest plakhera14c.mylabserver.com Running Running 3 minutes ago

jzk96ycyp2fd myglobal.w45sgx9gjpijtrq445avrd9wt nginx:latest plakhera12c.mylabserver.com Running Running 3 minutes ago

y1p3lri0h1w0 myglobal.b5lyurguajincw1l9see0etr7 nginx:latest plakhera13c.mylabserver.com Running Running 3 minutes ago

- Draining Swarm Node

- Sometimes, such as during planned maintenance, you need to set a node to DRAIN availability. DRAIN availability prevents a node from receiving new tasks from the swarm manager. It also means the manager stops tasks running on the node and launches replica tasks on a node with ACTIVE availability.
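Since the manager moves tasks off a drained node asynchronously, maintenance scripts often wait until the node is empty before touching it. The following is a sketch under assumptions, not the author's procedure: `remaining_tasks` and `drain_node` are hypothetical helpers built on `docker node update --availability drain` and `docker node ps` with its `desired-state` filter. The individual commands are walked through by hand right after this.

```shell
# remaining_tasks NODE
# Prints how many tasks the manager still wants running on the node.
remaining_tasks() {
  docker node ps --filter desired-state=running \
    --format '{{.Name}}' "$1" |
    awk 'NF { n++ } END { print n + 0 }'
}

# drain_node NODE [TRIES]
# Sets the node to DRAIN, then polls once per second (default 30 tries)
# until no running tasks are left on it.
drain_node() {
  node=$1
  tries=${2:-30}
  docker node update --availability drain "$node" >/dev/null || return 1
  while [ "$tries" -gt 0 ]; do
    [ "$(remaining_tasks "$node")" -eq 0 ] && return 0
    tries=$((tries - 1))
    sleep 1
  done
  return 1
}
```

After maintenance, `docker node update --availability active` makes the node eligible for new tasks again; tasks that were moved away are not moved back automatically.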

$ docker node update --availability drain plakhera14c.mylabserver.com

plakhera14c.mylabserver.com

- To check the status:

$ docker node ls

ID HOSTNAME STATUS AVAILABILITY MANAGER STATUS ENGINE VERSION

w45sgx9gjpijtrq445avrd9wt * plakhera12c.mylabserver.com Ready Active Leader 19.03.4

b5lyurguajincw1l9see0etr7 plakhera13c.mylabserver.com Ready Active 19.03.4

x1v3x9ejsnpzgateb9xhbi431 plakhera14c.mylabserver.com Ready Drain <--- 19.03.4

- To put the node back in the active state:

$ docker node update --availability active plakhera14c.mylabserver.com

plakhera14c.mylabserver.com

- To check the status:

$ docker node ls

ID HOSTNAME STATUS AVAILABILITY MANAGER STATUS ENGINE VERSION

w45sgx9gjpijtrq445avrd9wt * plakhera12c.mylabserver.com Ready Active Leader 19.03.4

b5lyurguajincw1l9see0etr7 plakhera13c.mylabserver.com Ready Active 19.03.4

x1v3x9ejsnpzgateb9xhbi431 plakhera14c.mylabserver.com Ready Active 19.03.4

- To display detailed information on one or more services:

$ docker service inspect mytestswarmserver01 --pretty

ID: x6wdo9c8nzi42xr58bqd9ma7o

Name: mytestswarmserver01

Service Mode: Replicated

Replicas: 3

Placement:

UpdateConfig:

Parallelism: 1

On failure: pause

Monitoring Period: 5s

Max failure ratio: 0

Update order: stop-first

RollbackConfig:

Parallelism: 1

On failure: pause

Monitoring Period: 5s

Max failure ratio: 0

Rollback order: stop-first

ContainerSpec:

Image: nginx:latest@sha256:77ebc94e0cec30b20f9056bac1066b09fbdc049401b71850922c63fc0cc1762e

Init: false

Resources:

Endpoint Mode: vip

- To get detailed information about a swarm node:

$ docker node inspect w45sgx9gjpijtrq445avrd9wt --pretty

ID: w45sgx9gjpijtrq445avrd9wt

Hostname: plakhera12c.mylabserver.com

Joined at: 2019-10-20 02:22:41.100359712 +0000 utc

Status:

State: Ready

Availability: Active

Address: 172.31.21.46

Manager Status:

Address: 172.31.21.46:2377

Raft Status: Reachable

Leader: Yes

Platform:

Operating System: linux

Architecture: x86_64

Resources:

CPUs: 2

Memory: 1.758GiB

Plugins:

Log: awslogs, fluentd, gcplogs, gelf, journald, json-file, local, logentries, splunk, syslog

Network: bridge, host, ipvlan, macvlan, null, overlay

Volume: local

Engine Version: 19.03.4

TLS Info:

TrustRoot:

-----BEGIN CERTIFICATE-----

MIIBajCCARCgAwIBAgIUYcja4nW8LxQTgHT55HprKcVObKcwCgYIKoZIzj0EAwIw

EzERMA8GA1UEAxMIc3dhcm0tY2EwHhcNMTkxMDIwMDIxODAwWhcNMzkxMDE1MDIx

ODAwWjATMREwDwYDVQQDEwhzd2FybS1jYTBZMBMGByqGSM49AgEGCCqGSM49AwEH

A0IABLA2Aq+5HrMLbYu7syTQI0oDymK5/NcWHha9wRTM7QIyLa5m56gdCUEsOLsx

ix+Z9GGqktMkXcUNeDPHEIBEqTCjQjBAMA4GA1UdDwEB/wQEAwIBBjAPBgNVHRMB

Af8EBTADAQH/MB0GA1UdDgQWBBTGdeR1vi2EEMYDUd59DxBNy6eD7zAKBggqhkjO

PQQDAgNIADBFAiAjsJzklv8iM5yQhxjYnz9UD8gJ6uCUim/LS/7YzykhGwIhAObX

z2rDu64/DOldMibzX7rBb/u0W4BTiaHudA+2Xpbs

-----END CERTIFICATE-----

Issuer Subject: MBMxETAPBgNVBAMTCHN3YXJtLWNh

Issuer Public Key: MFkwEwYHKoZIzj0CAQYIKoZIzj0DAQcDQgAEsDYCr7keswtti7uzJNAjSgPKYrn81xYeFr3BFMztAjItrmbnqB0JQSw4uzGLH5n0YaqS0yRdxQ14M8cQgESpMA==

Please follow me on my journey:

- Website: https://100daysofdevops.com/

- Twitter: @100daysofdevops OR @lakhera2015

- Facebook: https://www.facebook.com/groups/795382630808645/

- Medium: https://medium.com/@devopslearning

- GitHub: https://github.com/100daysofdevops/21_Days_of_Docker

This time, to make learning more interactive, I am adding:

- Slack

- Meetup

Please feel free to join this group.

Slack:

Meetup Group

If you are in the Bay Area, please join this meetup group: https://www.meetup.com/100daysofdevops/